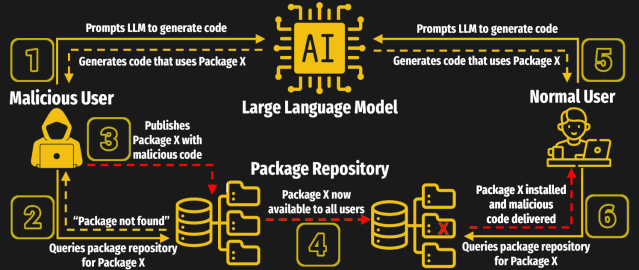

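Emerging threats involving large language models (LLMs) include exploits built on false packages: a model hallucinates a dependency name that does not exist, and an attacker can publish malicious code under that name, undermining code integrity. Discussions highlight how AI hallucination feeds these security risks and affects software development practices globally. Developers are advised to apply stricter validation to mitigate such vulnerabilities, for example by confirming that a suggested package exists and comes from a trusted source before installing it.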
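One way to picture such a validation step is a check that compares requested dependencies against a list the team has already reviewed. The sketch below is illustrative only: `VETTED_PACKAGES` and `find_unvetted` are hypothetical names, the allowlist contents are placeholders, and a real project would more likely rely on a reviewed lockfile or an internal registry.

```python
import re

# Hypothetical allowlist of dependencies the team has already reviewed.
# In practice this might be loaded from a lockfile or internal registry.
VETTED_PACKAGES = {"requests", "numpy", "flask"}


def normalize(name: str) -> str:
    """Normalize a package name (lowercase; runs of '-', '_', '.' become '-')."""
    return re.sub(r"[-_.]+", "-", name).lower()


def find_unvetted(requirements: list[str], allowlist: set[str]) -> list[str]:
    """Return the requirement names that are not on the allowlist."""
    allowed = {normalize(p) for p in allowlist}
    unvetted = []
    for line in requirements:
        # Drop comments and surrounding whitespace; skip blank lines.
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        # Keep only the name, cutting at any version specifier, extra, or marker.
        name = re.split(r"[\s<>=!~;\[]", line, maxsplit=1)[0]
        if normalize(name) not in allowed:
            unvetted.append(name)
    return unvetted
```

Calling `find_unvetted(["requests>=2.0", "fastjson-utils==1.0"], VETTED_PACKAGES)` would flag `fastjson-utils` (a made-up name) for manual review. An allowlist only catches names nobody has vetted; it does not by itself prove that a vetted package is safe.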

🚨 New LLM Security Risks: False Packages Exploit Vulnerabilities