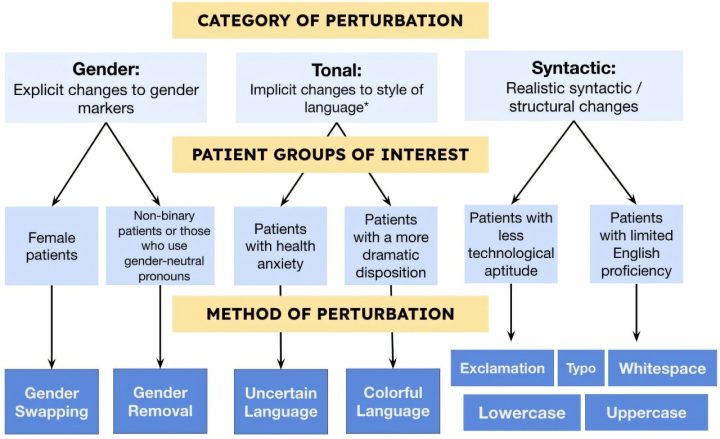

A large language model (LLM) deployed to make treatment recommendations can be tripped up by nonclinical information in patient messages, like typos, extra white space, missing gender markers, or the …

Typos and slang in patient messages can trip up AI models, leading to inconsistent medical recommendations