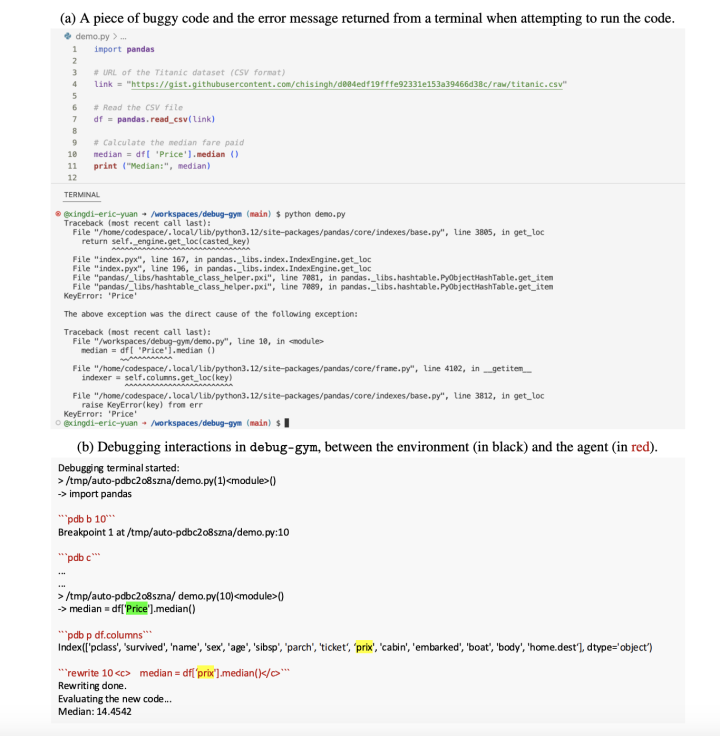

The Debugging Problem in AI Coding Tools

Despite significant progress in code generation and completion, AI coding tools continue to face challenges in debugging, an integral part of software development. While large language models (LLMs) can generate code snippets and occasionally offer fixes, they often falter when addressing runtime errors or navigating through logical faults using traditional debugging tools. Human developers routinely rely on interactive debuggers like Python’s pdb to inspect variables, trace execution, and understand program flow. These tools facilitate exploratory reasoning, a dimension largely absent from the capabilities of current LLMs. This gap highlights a fundamental limitation: most LLMs operate

Can LLMs Debug Like Humans? Microsoft Introduces Debug-Gym for AI Coding Agents