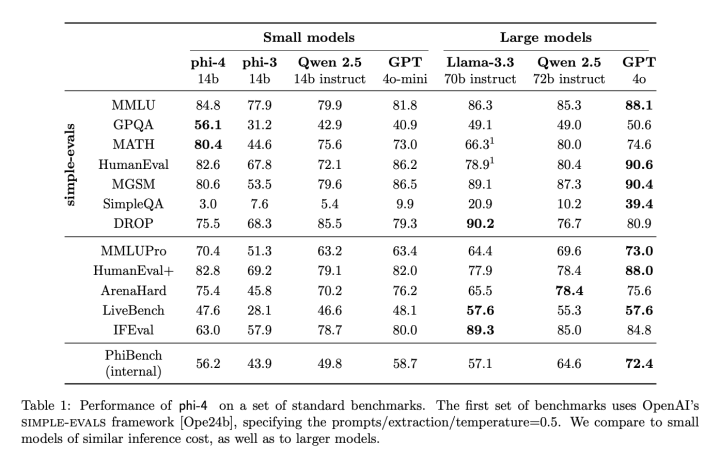

Large language models have made impressive strides in understanding natural language, solving programming tasks, and tackling reasoning challenges. However, their high computational costs and dependence on large-scale datasets bring their own set of problems. Many of these datasets lack the variety and depth needed for complex reasoning, and data contamination can compromise evaluation accuracy. These challenges call for smaller, more efficient models that can handle advanced problem-solving without sacrificing accessibility or reliability. In response, Microsoft Research has developed Phi-4, a 14-billion-parameter language model that excels in reasoning tasks while remaining resource-efficient. Building on the Phi

Microsoft AI Introduces Phi-4: A New 14 Billion Parameter Small Language Model Specializing in Complex Reasoning