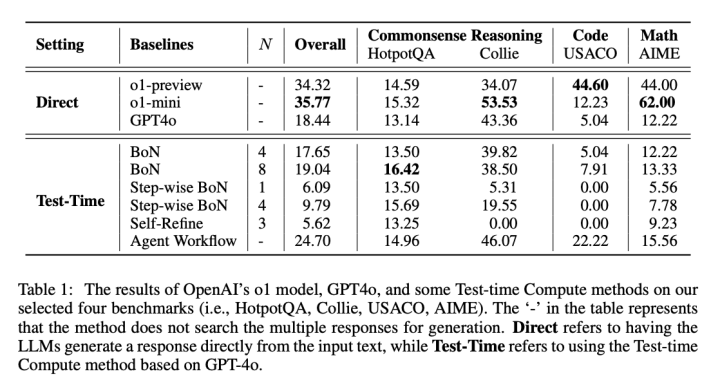

Large language models (LLMs) have significantly advanced the handling of complex tasks such as mathematics, coding, and commonsense reasoning. However, improving the reasoning capabilities of these models remains a challenge. Researchers have traditionally focused on increasing the number of model parameters, but this approach has hit a bottleneck, yielding diminishing returns at rising computational cost. As a result, there is a growing need for more efficient ways to enhance reasoning that do not rely solely on scaling up models. The focus is shifting toward understanding and optimizing the patterns these models use to perform reasoning tasks. A major problem facing LLM development

A Comprehensive Comparative Study on the Reasoning Patterns of OpenAI's o1 Model Across Mathematical, Coding, and Commonsense Reasoning Tasks